Master's Thesis

April 2009

Abstract

This thesis deals with design and implementation of a library providing object-relational mapping services for programs written in C++. Emphasis is put on its transparency and ease of its use. To achieve these goals the library uses GCCXML, a XML output extension to GCC. GCCXML helps the library to get description of the class model used in the user application and to simulate the reflection.

For the mapping purposes, new object-relational database features are discussed and a new mapping type is proposed.

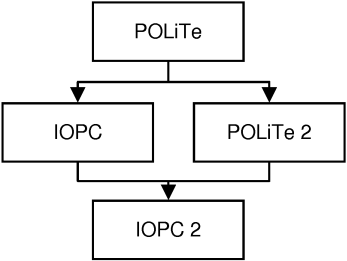

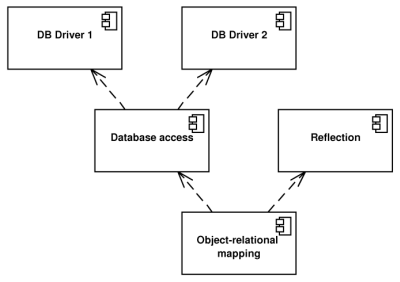

Implementation of the library is based on three related projects - the POLiTe, POLiTe 2 and IOPC libraries. The proposed and implemented library unifies them into one solid, flexible and extensible platform. Thanks to its modular architecture, the resulting library can be used in several configurations providing subsets of implemented services - database access, reflection and object-relational mapping.

Table of Contents

- 1. Introduction

- 2. Persistence layer requirements

- 3. Evolution of the IOPC 2 library

- 4. Basic concepts of the IOPC 2 library

- 5. Architecture of the IOPC 2 library

- 6. Conclusion

- References

- A. User's guide

- B. Sample schema SQL scripts

- C. Metadata overview

- D. DVD content

List of Figures

- 1.1. IOPC 2 evolution

- 2.1. Example class hierarchy

- 2.2. Vertical mapping tables

- 2.3. Horizontal mapping tables

- 2.4. Filtered mapping tables

- 2.5. Combined mapping tables

- 2.6. Proposed architecture of the O/R mapping library

- 3.1. Architecture of the POLiTe library

- 3.2. POLiTe persistent object states

- 3.3. POLiTe references

- 3.4. Architecture of the POLiTe 2 library

- 3.5. Caches in the POLiTe 2 library

- 3.6. States of the POLiTe 2 objects

- 3.7. Dereferencing DbPtr in POLiTe 2

- 3.8. The IOPC library workflow

- 3.9. POLiTe library components used in IOPC LIB

- 3.10. Structure of the IOPC LIB

- 4.1. Reflection using the GCCXML

- 4.2. IOPC 2 base classes

- 4.3. SQL schema generated from classes using combined mapping

- 4.4. Top-level part of the object-relational mapping algorithm

- 4.5. Description of the Insert_Row method. Not used for ADT mapping.

- 4.6. Inserting objects using filtered mapping

- 4.7. Iterative loading algorithm

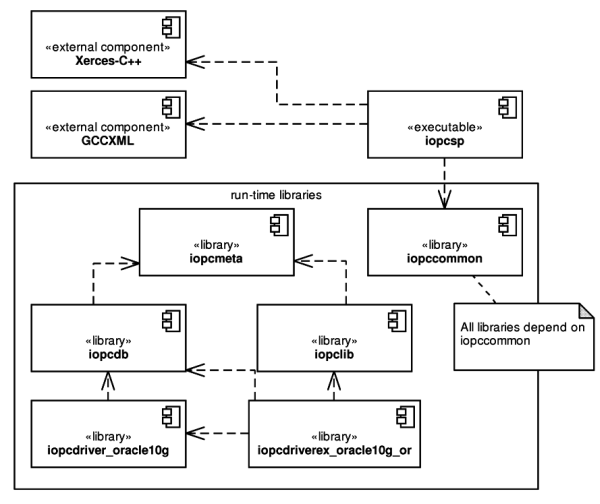

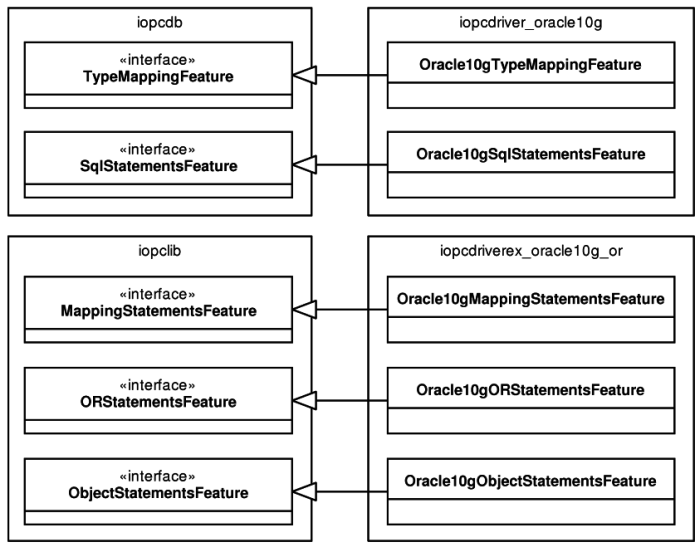

- 5.1. Overview of the IOPC 2 architecture

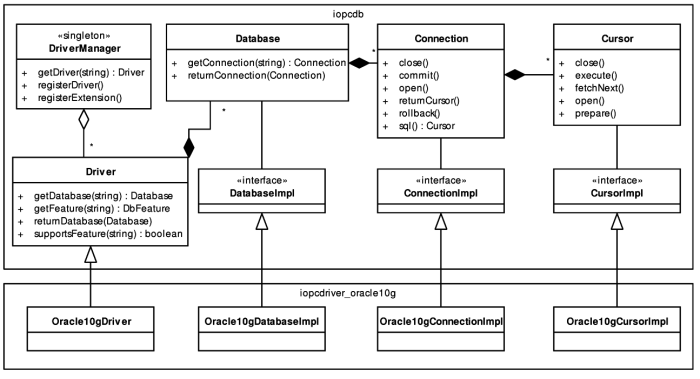

- 5.2. Basic classes of the database layer

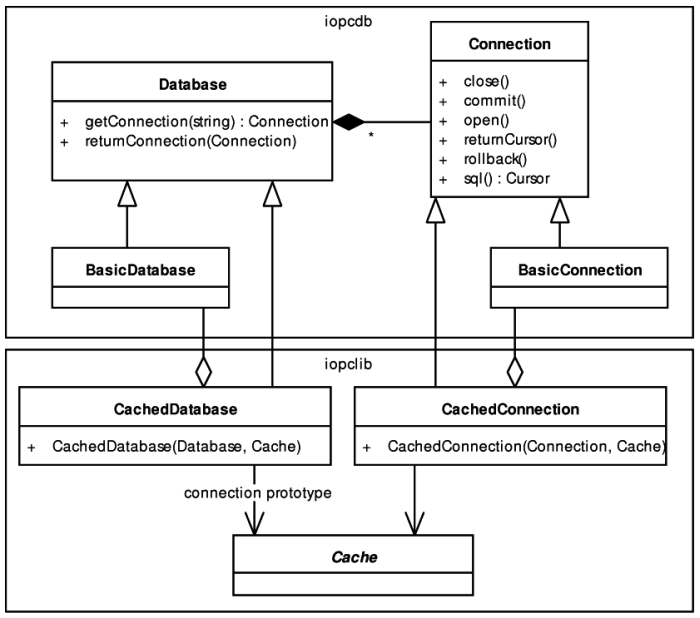

- 5.3. Using the decorator pattern

- 5.4. Oracle 10g database driver extensions and driver features

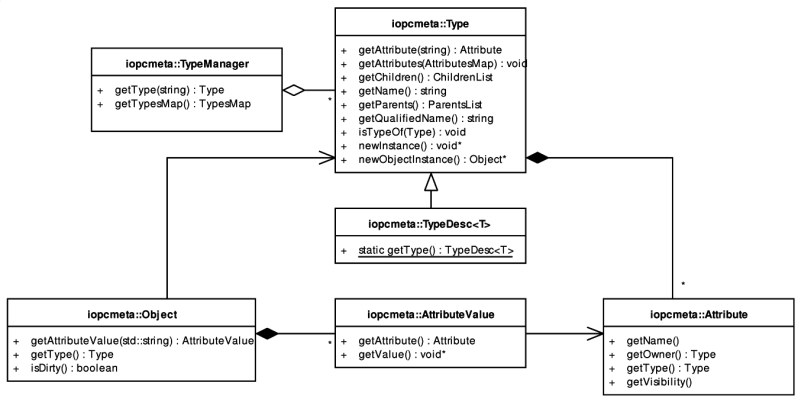

- 5.5. The iopcmeta classes

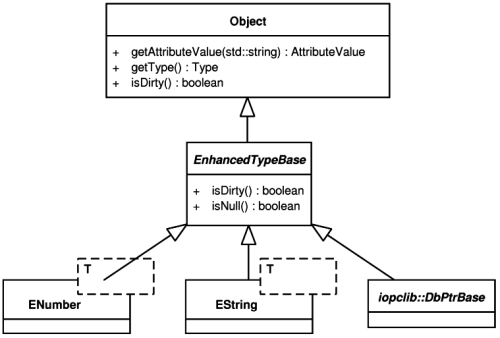

- 5.6. Structure of the enhanced data type classes

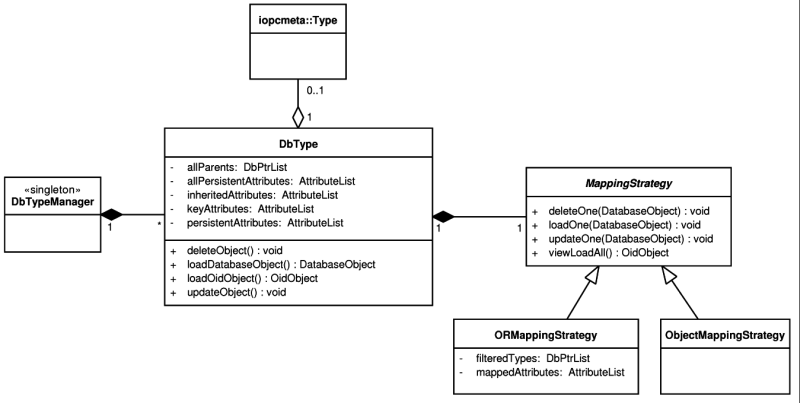

- 5.7. Classes involved in the database mapping process

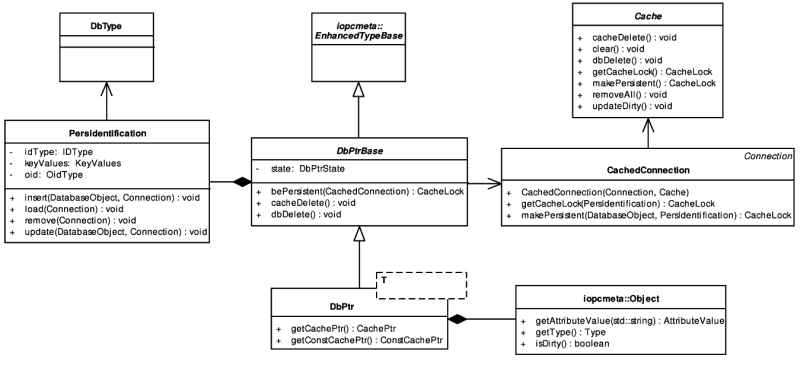

- 5.8. Classes manipulating with persistent objects

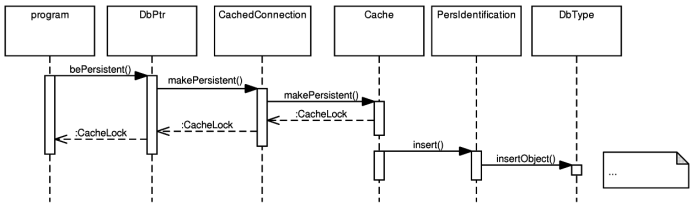

- 5.9. The bePersistent operation

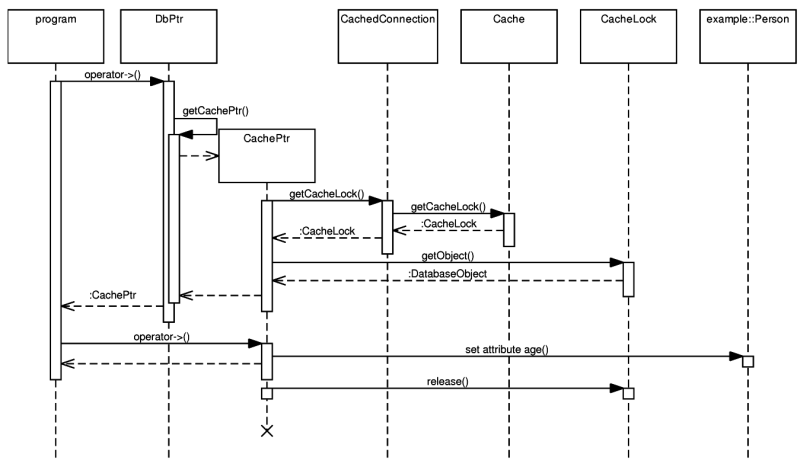

- 5.10. Database pointer and cache pointer interaction

- 5.11. Interaction with the O/R mapping services

- 5.12. Basic classes of the cache layer. VoidCache architecture.

- 5.13. Extended interface of the cache layer.

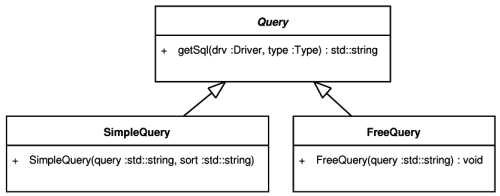

- 5.14. The Query classes

List of Examples

- 2.1. Using object types in Oracle

- 2.2. Accessing objects in Oracle

- 2.3. Nested tables in Oracle

- 2.4. References in Oracle

- 2.5. A polymorphic query using object-relational features of the Oracle database.

- 3.1. Definition of a class in the POLiTe library

- 3.2. Associations in the POLiTe library

- 3.3. Persistent object manipulation

- 3.4. Queries in the POLiTe library

- 3.5. Persistent object manipulation in the POLiTe 2 library

- 3.6. Executing queries in the POLiTe 2 library

- 3.7. Definition of persistent classes in IOPC

- 3.8. XML metamodel description file

- 4.1. Basic persistent class definition in IOPC 2

- 4.2. GCCXML output

- 4.3. IOPC SP output

- 4.4. DatabaseObject usage

- 4.5. SQL schema generated for DatabaseObject subclasses.

- 4.6. SQL schema generated for OidObject subclasses.

- 4.7. Using the ADT mapping.

- 4.8. SQL schema from classes that use ADT mapping.

- 4.9. Loading objects using ADTs from an Oracle database

- 5.1. Connecting to an Oracle database in IOPC 2

- 5.2. Type traits

- 5.3. Using the reflection interface

- 5.4. Enhanced datatype usage

- 5.5. Java annotations and C# attributes

- 5.6. Example usage of metadata in the IOPC 2 library

- 5.7. Friend type description declaration

- 5.8. Using the ScriptsGenerator to create required database schema.

- 5.9. Using the bePersistent method

- 5.10. Example query in IOPC 2

Today, object-oriented languages represent standard instruments for business application and information system development. These systems usually operate with large amounts of persistent data stored in relational database management systems (RDBMS). Data in RDBMSs are however represented differently from data in the application layer. Developers need to do a lot of programming overhead to deal with this so-called impedance mismatch every time they want to move data between relational databases and application-level object models.

Object-oriented database management systems (OODBMS) try to mitigate the impedance mismatch for example by providing navigation using pointers instead of using joins as in the relational databases. Despite their advantages the object-oriented databases are not as widely used as the relational databases. Mostly because of the lack of various tools like reporting, or OLAP[1] and due to the industry standards pushed by the big players - Oracle, Microsoft and IBM. Moreover many of RDBMS creators already addressed the impedance mismatch issue by incorporating object-oriented features into their products. Doing it a new kind of database management system, object-relational database management systems (ORDBMS), were created.

Another approach to bypassing the impedance mismatch is to isolate the developer from direct database data manipulation at application level. This goal can be accomplished by using an object-relational (O/R) mapping layer. This layer transparently maps relational data into application object model and vice versa. The O/R tools usually offer additional services like object querying or caching.

Current information systems are often written in high-level languages like Java or C#. There exist established O/R mapping tools for these environments. Well-known is Hibernate[2] for Java and nHibernate[3] relatively new ADO.NET Entity Framework from Microsoft[4] for C#.

O/R mapping tools for such languages can use their feature called reflection. Reflection allows a program to find out information about its own data model and to modify it at run-time. The O/R mapping layer then can easily inspect the structure of the classes being mapped, and based on this information, it can transparently load or save data from/to the underlying database. Languages that support reflection are often referred to as reflective languages.

Another frequently used language in this area is C++. Unfortunately, C++ is not a reflective language, so the building of O/R mapping layer is a bit more difficult. The goal of this thesis is to develop such a mapping library, which should work as transparently as possible. Second goal is to examine possibilities the ORDBMSs can provide to an O/R mapping library.

The resulting library implemented as part of this thesis uses advantages of three previous projects. Their common predecessor, the POLiTe library[5], was developed as a part of doctoral thesis [02]. Two follow-ups came after this work as master theses focusing on different areas of the O/R mapping concept:

-

Master thesis [03] (called POLiTe 2 in further text) addressed mainly the performance and notably enhanced functionality of the object cache. It also added multithreading support and made the interface of the library safer to use.

-

Master thesis [04] (IOPC[6]) designed a new persistence layer. The main advantage of this new layer is transparent application development without the need to additionally describe classes in it. It uses OpenC++ source-to-source translator to analyze and prepare the source code for object-relational mapping. Even though brand-new interface was created, the library still supports classes written in the POLiTe-style.

The IOPC 2 library provided in this thesis not only merges the development back into one product offering most of previously implemented features without their drawbacks. Furthermore, the library implements new ideas like standalone reflection mechanism, additional mapping type using object-relational database abilities and many other.

In the following section we will introduce basic concepts of the object-relational mapping, discuss new features of ORDBMSs and describe requirements and goals of the IOPC 2 implementation. Then, in the third chapter, evolution of the IOPC/POLiTe libraries will be presented in the context of requirements placed and their features will be compared with each other. Chapter 4, Basic concepts of the IOPC 2 library describes basic concepts of the IOPC 2 implementation whereas Chapter 5, Architecture of the IOPC 2 library contains detailed architectural information. In conclusion we will evaluate the achievements of this thesis and will propose areas for further development. Appendix contains user guide.

Enclosed DVD contains library source code with examples, binary distribution for Linux, documentation and in the first place a VMWare image with pre-installed environment. The image contains Ubuntu Linux, freely distributable Oracle XE database, GCCXML and all other IOPC 2 dependencies. The library source code and source code of the examples is stored in the image as an Eclipse CDT project. So all the examples can be modified, compiled and run right away.

Table of Contents

Sections in this chapter analyse the requirements that may be imposed on a O/R mapping library and that were taken into account during the design and implementation phases of development.

In the world of object-oriented programming object identity represents an object property that helps to distinguish objects from each other. Even if two distinct objects have same values of all their attributes and so their inner state is identical, they are still different instances with different identity. A reference to an object is a closely related term to the identity as it uses this identity to describe the object it is referring to.

If we consider entries in a relational table as objects, the identity of these objects could be based on any key in the table - usually the primary key. Persistence layer would then use such a key as a description of object identity on the application level.

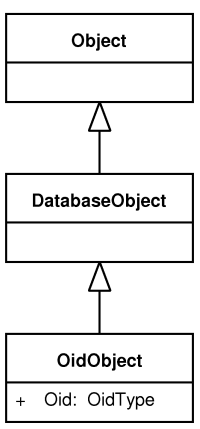

In object-oriented systems, where database is used only as a mere storage of the object model and/or the design focuses on the application-tier, an object identifier (OID) approach is used. OID is a name for a special table column and for corresponding class attribute which has no business meaning. On the application level it is usually hidden from users or application developers. OID contains an identifier, usually a number, UUID[7] or a list of numbers, which is unique for each persistent object within the database scope. Object OID never changes during its lifetime. OID is mapped into database tables as a surrogate key. Instances of classes containing an OID attribute are called OID objects further in the text.

Another approach is needed for systems built upon an existing database, for systems where the use of natural, not surrogate, keys is required or for systems with read-only or no-schema-changes-allowed databases. The object-relational layer should be able to absorb identity of persistent objects from keys (even multi-column keys) in existing schema. We will call such objects as database objects.

In several cases, we may want the object-relational layer to manipulate objects without any identity. These objects may represent results of aggregation queries, rows from non-updateable views or rows from tables without any keys. Transience is an important feature of these objects: their modified state cannot be stored back to the underlying database - origin of the data they contain may not even be traceable back to particular row or particular table.

The all-purpose O/R mapping layer should support OID objects as well as database objects and even transient objects for query results.

Persistence layer considered in the context of this thesis should be able to manipulate persistent instances of certain classes. These classes are called persistent classes, its instances persistent objects. Storing objects to database implies that the layer should store their attribute values to underlying database structures. Because all predecessors of this thesis used relational database systems as their persistent storage, let's focus first on this area first.

Persistent classes would be represented as tables and their attributes as their columns. Instances of persistent classes would be inserted into these tables as rows containing instance attribute values associated with corresponding table columns. Basic requirements on a persistence library could be:

-

Ability to associate persistent classes with database tables

-

Ability to map attributes of these classes on columns in associated tables. This means that the layer should be able to store attributes of certain C++ types (basic numeric types, strings) into the database as column values.

Classes can also contain attributes of structured types or collections, which are often mapped into separate tables or split into more columns in the relational model.

Last attribute type to be discussed is an association (C++ pointer/reference). Association can be modelled using foreign key relationship between matching tables. The persistence layer should be able to handle single associations as well as collections of associations.

-

An optional requirement may be an ability to generate required database schema in form of a SQL create (or drop) script. The persistence layer may require its own structures in the underlying database or it may be able to operate upon existing database schema.

-

Ability to query subsets of object model content. The layer should provide a query language that would abstract from the physical representation of the object model in the database.

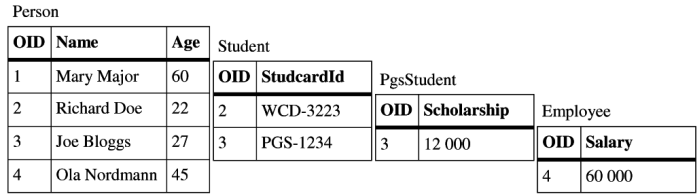

Up to now we have considered only single classes without inheritance relations. However, in C++ classes can form complex inheritance hierarchies and it is a natural requirement to be able to store descendants of persistent classes too. There are several ways how to store these hierarchies into a relational database.Figure 2.1, “Example class hierarchy” contains an example hierarchy on which we will demonstrate these mapping types. This hierarchy will also be used and modified further in the text.

Vertical mapping is a most common (and natural) way of mapping attributes of persistent classes in an inheritance hierarchy into tables in a relational database. Each class in this hierarchy has one associated table in the database. Values from attributes declared in correspondent classes only are stored into these tables. This means that attributes declared in current class are mapped into its associated table, attributes from parent class are mapped into its "parent" table etc. Storing one object invokes a cascade of database inserts. Similar rules apply for updates, deletes and selects. However, selects can be simplified by using table joins and database views. This solution offers good performance for shallow hierarchies, which is getting worse with the inheritance graph getting deeper. It is a best choice for scenarios where polymorphism does matter - by querying one table we easily get instances of associated class and its descendants.

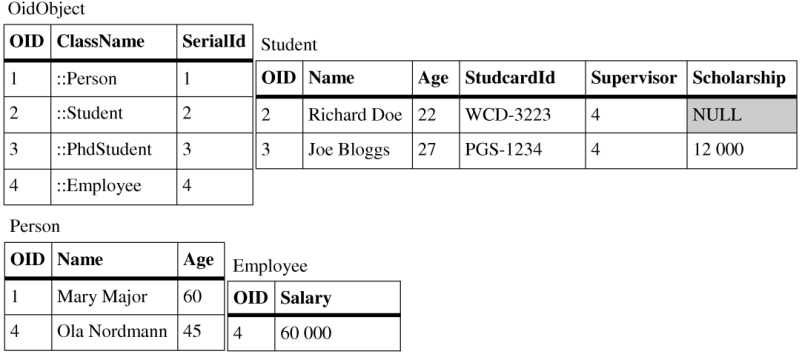

Let's consider following instances of the classes from Figure 2.1, “Example class hierarchy”:

Student(name: "Richard Doe", age: 22, studcardid: "WCD-3223") PhdStudent(name: "Joe Bloggs", age: 27, studcardid: "PHD-1234", scholarship: 12000) Employee(name: "Ola Nordmann", age: 45, salary: 60000) Person(name: "Mary Major", age: 60)

Vertical mapping will spread data from these instances into four tables as illustrated in the Figure 2.2, “Vertical mapping tables”. The tables are arranged so that attribute values belonging to one particular class are displayed in the same row. To be able to join data from the tables we use surrogate OID as explained in the previous chapter. All rows belonging to one particular object are assigned the same OID.

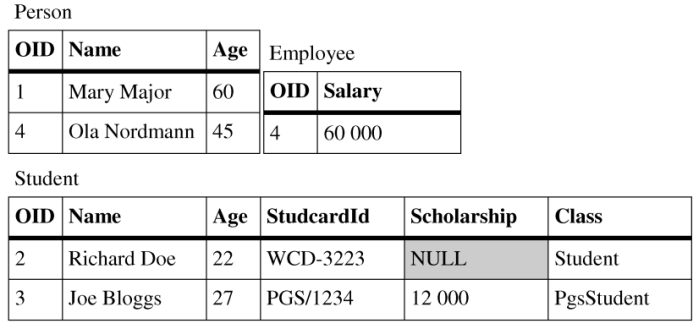

Horizontal mapping offers better performance for scenarios where we don't need polymorphic queries - accessing descendants of specific class. Again, each persistent class in a hierarchy has one associated table into which its instances store their attributes. The difference from vertical mapping is that these tables contain even attributes inherited from parent persistent classes. Rows in these tables contain enough information to load complete persistent class instances, thus no cascade operations are needed. Every instance of horizontally mapped class is mapped only into one table row in the database.

As you can see in Figure 2.3, “Horizontal mapping tables” - queries using polymorphism can be very hard to perform. Finding a specific object of Person type or its descendants involves looking into all the tables. However, opposite to the vertical mapping, if we work with objects of specific type (not including descendants), we don't need any joins in select statements or cascade inserts/updates.

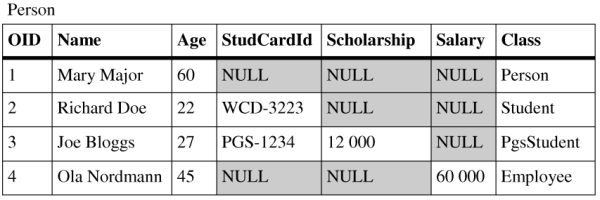

Filtered mapping assigns only one database table to all

persistent classes in one inheritance hierarchy - see Figure 2.4, “Filtered mapping tables”.

This table contains columns that represent all attributes from all classes in that

hierarchy. Filtered mapping doesn't suffer from disadvantages of two previous approaches

- it performs very well on polymorphic data and doesn't involve cascaded operations/joins.

A disadvantage of this approach is excessive storage requirement. Most of the rows

in the table will contain empty cells in columns that belong to attributes from

descendants or from classes not being in ancestor relationship of the matching class

(for example the column Scholarship in

the "Ola Nordmann" row. Second thing is that it is necessary to add a column telling

us which class the rows belong to. As we have all rows in one table there is no

other easy way how to distinguish the instance types.

Combined mapping is a combination of mappings mentioned above. It allows users to use all kinds of mappings in one inheritance hierarchy. Combined mapping is the most sophisticated variation that allows users to specify these mappings according to their needs. It is also quite complex for implementation, it has some constraints how the mapping types can be used and it is not well maintainable on the database side. Database structures created for combined mapping would require nontrivial constraints if their content was modified other way than using the persistence layer that created the structures. The persistence layer should hide this complexity beyond views to provide at least convenient read-only access. To see how the combined mapping can be used refer to Figure 2.5, “Combined mapping tables”.

The Employee class uses vertical mapping, the Student class uses horizontal mapping and the

PhdStudent class uses filtered mapping. Classes that use filtered

mapping can choose into which of their ancestors thir attributes will be mapped.

Class PhdStudent is mapped to the table belonging

into the Student class.

Object-relational databases offer higher level of abstraction over the problem domain. They extend relational databases with object-oriented features to minimise the gap between relational and object representation of application data, known as the impedance mismatch problem. For detailed information about user defined types and other features of object-relational databases refer to [05], [06]. This area is introduced by an 1999 revision of the ISO/IEC 9075 family of standards, often referred to as SQL3 or SQL: 1999. Because the level of implementation of the standard varies between available products, you may need to see their manuals too. For Oracle 10g refer to [07]. Main features of the object-relational databases are summarised below.

User-defined types are custom data types which can be created by users using the new features of object-relational database systems. These types are used in table definitions the same way as built-in types like NUMBER or VARCHAR. There are several kinds of UDTs - for example - distinct (derived) types, named row types, and most importantly the abstract data types (ADT), which we will focus on in the following paragraphs.

ADT is a structured, user defined type defined by specifying a set of attributes and operations much in a similar way to object-oriented languages like C++ or Java. Attributes define the value of the type and operations its behaviour. ADTs can be inherited from other abstract data types (in terms of object-oriented programming) and can create type hierarchies. These hierarchies can reflect the structure of data objects defined in application-tier modules. Instances of ADTs are called objects and can be persisted in database tables. See Example 2.1, “Using object types in Oracle” for an illustration how these types are defined and used in Oracle ORDBMS.

Example 2.1. Using object types in Oracle

-- type definitions

CREATE TYPE TPerson AS OBJECT (

name VARCHAR2(50),

age NUMBER(3)

) NOT FINAL;

CREATE TYPE TStudent UNDER TPerson(

studcardid VARCHAR2(20)

) NOT FINAL;

CREATE TYPE TPhdStudent UNDER TStudent(

scholarship NUMBER(10)

) NOT FINAL;

CREATE TYPE TEmployee UNDER TPerson(

salary NUMBER(10)

) NOT FINAL;

-- storage

CREATE TABLE Person OF TPerson;

-- fill it with data

INSERT INTO Person VALUES(

TPerson('Mary Major', 60)

);

INSERT INTO Person VALUES(

TStudent('Richard Doe', 22, 'WCD-3223')

);

INSERT INTO Person VALUES(

TPhdStudent('Joe Bloggs', 27, 'PHD-1234', 12000)

);

INSERT INTO Person VALUES(

TEmployee('Ola Nordmann', 45, 60000)

);

First, the supertype TPerson and its descendants TStudent, TPhdStudent and TEmployee are defined. The NOT FINAL keyword allows us to create subtypes of given types. Then a physical storage table Person is created. This table can hold not only instances of the TPerson type but also instances of its descendants. Accessing these instances is demonstrated in the following Example 2.2, “Accessing objects in Oracle”.

Example 2.2. Accessing objects in Oracle

SELECT VALUE(x) FROM Person x;

-- returns:

TPERSON('Mary Major', 60)

TSTUDENT('Richard Doe', 22, 'WCD-3223')

TPHDSTUDENT('Joe Bloggs', 27, 'PHD-1234', 12000)

TEMPLOYEE('Ola Nordmann', 45, 60000)

-- accessing descendant attributes:

SELECT

TREAT(VALUE(x) AS TStudent).studcardid AS studcardid

FROM Person x

WHERE VALUE(x) IS OF (TStudent);

-- returns:

WCD-3223

PHD-1234

The first select lists all objects stored in the Person table. Second select lists all student card IDs of all student objects that are stored in the table.

Nested tables. Nested tables violate the first normal form in a way that they allow the standard relational tables to have non-atomic attributes. Attribute can be represented by an atomic value or by a relation. Example 2.3, “Nested tables in Oracle” illustrates how to create and use nested tables in Oracle database system. The example modifies the Person type by adding a list of phone numbers to it. Interesting is the last step in which we perform a SELECT on the nested table. To retrieve the content of the nested table in a relational form, the nested table has to be unnested using the TABLE expression. The unnested table is then joined with the row that contains the nested table.

Example 2.3. Nested tables in Oracle

-- a type representing one phone number

CREATE TYPE TPhone AS OBJECT (

num VARCHAR2(20),

type CHAR(1)

);

-- a type representing a list of phone numbers

CREATE TYPE TPhones AS TABLE OF TPhone;

-- the modified TPerson type

CREATE TYPE TPerson AS OBJECT (

name VARCHAR2(50),

age NUMBER(3),

phones TPhones

) NOT FINAL;

-- storage

CREATE TABLE Person OF TPerson

NESTED TABLE phones STORE AS PhonesTable;

-- fill it with data

INSERT INTO PERSON VALUES(

TPerson('Mary Major', 60, TPhones(

TPhone('123-456-789', 'W'),

TPhone('987-654-321', 'H')

) ) );

-- obtaining a list of phones of a particular person

SELECT y.num, y.type

FROM Person x, TABLE(x.phones) y

WHERE x.name = 'Mary Major';

-- returns rows:

123-456-789 W

987-654-321 H

Please note, that the nested table

PhonesTable in

Example 2.3, “Nested tables in Oracle” may need an index on an implicit

hidden column nested_table_id to prevent

full table scans on it.

Collection types. SQL3 defines also other collection types like sets, lists or multisets. In addition to nested tables, Oracle implements the VARRAY construct which represents an ordered set (list). The main difference is that VARRAY collection is stored as a raw value directly in the table or as a BLOB[8], whereas nested table values are stored in separate relational tables.

Reference types. We can think of the database references

as of pointers in the C/C++ languages. References model the associations among objects.

They reduce the need for foreign keys - users can navigate to associated objects

through the reference. In the following Example 2.4, “References in Oracle”

we will add a new subtype TEmployee and modify the TStudent type from previous examples by adding a reference

to the student's supervisor, which is an employee, to it. Note that we need to cast

the reference type REF(x) to REF TEmployee in the

INSERT statement because REF(x) refers to the base type TPerson.

Example 2.4. References in Oracle

-- type definitions

CREATE TYPE TPerson AS OBJECT (

name VARCHAR2(50),

age NUMBER(3)

) NOT FINAL;

CREATE TYPE TEmployee UNDER TPerson(

salary NUMBER(10)

);

CREATE TYPE TStudent UNDER TPerson(

studcardid VARCHAR2(20),

supervisor REF TEmployee

);

-- storage

CREATE TABLE Person OF TPerson;

-- insert an employee into the Person table

INSERT INTO Person VALUES(TEmployee('Ola Nordmann', 45, 60000))

-- insert a student with a reference to his supervisor

INSERT INTO Person

SELECT TStudent('Richard Doe', 22, 'WCD-3223',

TREAT(REF(x) AS REF TEmployee)))

FROM Person x

WHERE x.name = 'Ola Nordmann';

-- select all students with their supervisors

-- dereferencing uses dot notation

SELECT x.name, TREAT(value(x) as TStudent).supervisor.name

FROM Person x

WHERE VALUE(x) IS OF (TStudent);

-- returns a row:

Richard Doe, Ola Nordmann

As we already know about the object-relational databases, These new features of object-relational databases can be used to enhance functionality of described mapping types. They can also be a basis for a new mapping type that will entirely depend on the use of ORDBMS. First, let's have a look how the attribute mapping can be improved:

-

Collections (C++ containers) can be mapped into single columns as nested tables or instances of one of the SQL3 collection data types.

-

Structured attributes (C++ struct or class) can be mapped into single columns as instances of SQL3 structured data types.

-

Associations (C++ pointers or references) can be mapped as SQL3 references.

Second, let's discuss the mapping of classes and inheritance hierarchies. It is quite obvious that user-defined types can be used for this task. Abstract data types can be created for each class in the inheritance hierarchy by copying its inheritance graph. Instances of the types can be then inserted into one table. The earlier presented Example 2.1, “Using object types in Oracle” displays structures that may be generated for classes from Figure 2.1, “Example class hierarchy” using such kind of database mapping.

This type of mapping is referred to as ADT mapping in this thesis[9]. Its benefit is that it moves most of the responsibilities of the persistence layer to the underlying database system. For example obtaining a list of fully-loaded instances of specific type and its descendants involves several joins in the vertical mapping (filtered mappings or other variations using the combined mapping). This must be "planned" by the persistence layer. All such polymorphic queries are best performed using the ADT mapping (see the Example 2.5, “A polymorphic query using object-relational features of the Oracle database.”), as all these tasks can be accomplished only using the user-defined types and SQL3 queries or statements.

Example 2.5. A polymorphic query using object-relational features of the Oracle database.

SELECT name, age, TREAT(VALUE(x) AS TPhdStudent).studcardid AS studcardid, TREAT(VALUE(x) as TPhdStudent).scholarship as scholarship FROM Person x WHERE VALUE(x) IS OF (TPhdStudent);

A serious problem for a persistence layer using the ADT mapping is that there are major differences between database systems in the object-relational area. For example DB2 does not offer any type similar to the Oracle VARRAY data type. Or another example - in DB2 you have to create whole table hierarchy for inherited object types - much like as you would when creating storage structures for a vertically-mapped data type. These issues imply that the persistence layer should be flexible and modular enough to be able to support different database systems.

Another problem is multiple inheritance of ADTs. Although SQL3 standard supports multiple inheritance, it is not implemented neither in the current version of Oracle nor in the current version of DB2.

Object-relational systems allow users usually to load data in two ways. Either by traversing the model of persistent objects through associations and letting the persistence layer to load missing data into referenced local copies or by exploiting the ability of the underlying DBMS to execute SQL queries against the data stored in it. The persistence layer can provide direct access to the database by allowing its users to run SQL queries on tables or views the layer generated. This approach is not very user friendly as it requires the users to know the internals of database mapping performed by the persistence layer. The layer should therefore offer its own query language which will hide the complexity of the database structures. Queries in such language can be passed as character strings or as objects which represent attributes, values, comparison criteria etc. Depending on the implementation, users may filter only objects of one inheritance hierarchy by their attribute values, perform polymorphic queries returning objects of specified type and its descendants or they may query associations between objects. This queries represented in natural language would be:

-

Find all students older than 26. (Age >=26)

-

Find all students including Ph.D. students. (Actually all queries may be modified to include Ph.D. students).

-

Find all students which are supervised by Mrs. Ola Nordmann. (Using the modified model from Example 2.4, “References in Oracle”)

The role of caching is to speed up applications that use persistent objects by delaying database mapping operations. This is generally achieved by taking ownership of these objects when they are not currently in use by the user application. If the user applications needs an already released object again, the caching facility[10] looks it up in its catalogue and if found, returns it to the user application, saving the time-consuming database operations. The database operations for storing, updating or loading persistent objects are controlled by the cache layer, not by the user application. The layer is therefore responsible for creating and destroying persistent object instances.

Users of the persistence layer may want to examine the structure of persistent classes at run-time. This is not of a big issue in reflective languages like Java or C#, but in the C++, which is not reflective language, this requirement may pose a problem. Yet not necessarily, because the persistence layer must know about the structure of classes it is mapping to database. So, it only depends on the particular implementation of a C++ O/R mapping library whether it provides access to this information and how.

If the library puts these introspection features behind a unified interface and allows to inspect wider set of classes than only the persistent classes, it may provide at least simpler alternative to reflection features offered by reflective languages. This may be a big advantage, because developers tend to include O/R mapping features into their application frameworks and O/R mapping is often one of the pillars of infrastructural part of business applications. Therefore it reduces the need for other reflection library.

Based on the discussion in previous sections, we are able to specify three relatively autonomous areas a C++ persistence library should cover:

- Database access. The library should not be database dependent. To achieve this goal, the database access must be virtualised by providing an interface to other parts of the library which will hide the differences between databases the users may use. The library should contain modules called database drivers translating and dispatching requests from the interface to concrete database instances. Database drivers should be separate modules allowing users to select between them without the need to recompile the whole library. The architecture should be flexible enough to be able to handle relational as well as object-relational database systems. It would be also nice if the whole database access infrastructure was a stand-alone module as the discussed database interface could be used as a database access library.

- Reflection. If the reflection capabilities, as described in the previous section, were provided as a stand-alone module, the library could be used in a reflection library configuration.

- Object-relational mapping. The complete O/R mapping library would need both, the database access and reflection configurations: The reflection to inspect the structure of the persistent classes and the database access interface to load and store them from/to a database. The library should provide a module which will manage and perform the O/R mapping including related tasks as caching and querying. This O/R mapping module will depend on the previous two modules.

In this chapter, we analyzed basic aspects of an O/R mapping library implementation. Based on this analysis, we can summarise the requirements on the library. Some of the requirements are required while some are optional. We list them for further reference.

-

Common O/R mapping requirements

-

Ability to associate persistent classes with database tables

-

Ability to map attributes of persistent classes to database columns or attributes in instances of user-defined types

-

-

Ability to use at least one type of O/R mapping (better all of them):

-

Horizontal

-

Vertical

-

Filtered

-

Object

-

Combination of the mapping types

-

-

Ability to persist references between objects

-

Ability to persist collections of objects

-

Ability to generate required database schema or ability to work with an existing unmodifiable database schema

-

Querying in the object model context.

-

Object caching.

-

Reflection

-

Modular library architecture

Table of Contents

The following paragraphs outline design and functionality of the IOPC 2 library predecessors.

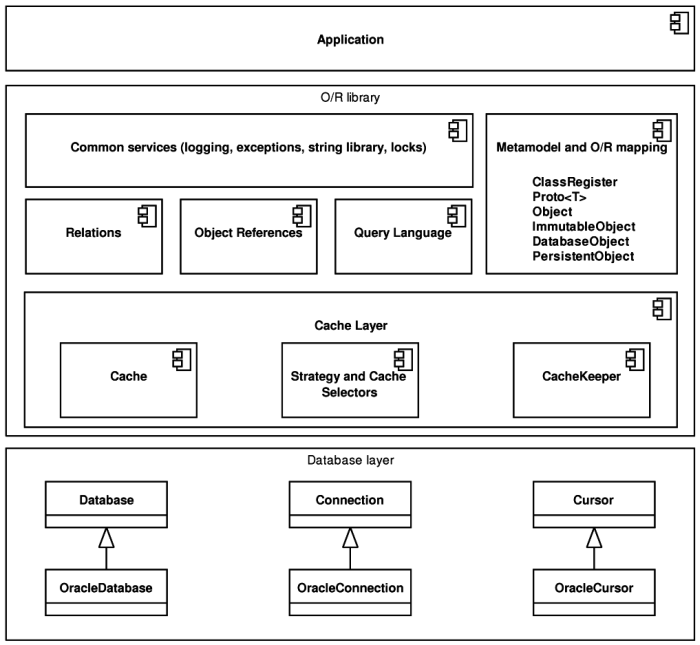

The common predecessor of IOPC, IOPC 2 and POLiTe 2 libraries - POLiTe - represents a persistence layer for C++ applications. The library itself is written in C++. Applications incorporate the library by including its header files and by linking its object code. The library offers following features:

-

Persistence of C++ objects derived from specific built-in base classes. Class hierarchies are mapped vertically.

-

Persistence of all simple numeric types and C strings (char*).

-

Query language for querying persistent objects.

-

Associations between persistent objects. Ability to combine more associations to manipulate indirectly associated instances.

-

Simple database access.

-

Common services like logging or locking.

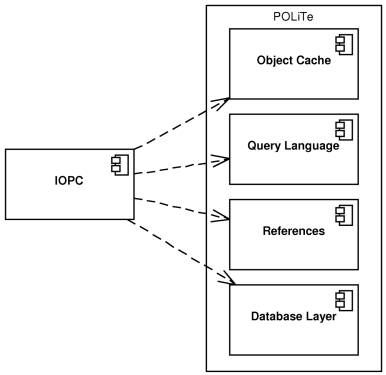

Even though the library can be divided to several functional units, it compiles as one shared library. The architecture of the library is outlined in the Figure 3.1, “Architecture of the POLiTe library”. The main functional units are discussed in the following sections.

POLiTe contains several classes that provide database access. At the time, the library supports the Oracle 7 database and the code uses OCI[11] 7 interface to access it. The classes are accessed via common interface that can be used for implementing other RDBMs to the library.

The interface consists of a set of abstract classes - Database,

Connection and Cursor.

Communication with the database flows exclusively through this interface and its

implementation (OracleDatabase,

OracleConnection and OracleCursor). The

interface Database provides a logical representation

of a database (e.g. an Oracle instance), Database

can create one or more connections (Connection) to

the database. The Connection interface represents

the communication channel with the database. Using the implementations of the Connection interface it is possible to send SQL statements

to database and receive responses in the form of cursors (Cursor).

The response consists of a set of one or more rows that can be iterated through

the Cursor.

Every persistent class maintainable by the POLiTe library has to be described by

a set of pre-processor macro calls. These calls are included directly into the class

definitions or near them. Description of the class attributes and the necessary

mapping information has to be provided together with declaration of every persistent

class. Metainformation covers class name, associated database table, parents, all

persistent attributes with their data types and corresponding table columns and

more. For complete list see [08].

Example 3.1, “Definition of a class in the POLiTe library” displays definition

of our classes Person and Student

in the POLiTe library.

Example 3.1. Definition of a class in the POLiTe library

class Person : public PersistentObject {

// Declare the class, its direct predecessor(s) ...

CLASS(Person);

PARENTS("PersistentObject");

// ... and its associated table

FROM("PERSON");

// Define member attributes

dbString(name);

dbShort(age);

// Primary key OID is inherited from PersistentObject

// Map other attributes

MAP_BEGIN

mapString(name,"#THIS.NAME",50);

mapShort(age,"#THIS.AGE");

MAP_END;

};

// Define method returning pointer to the prototype

CLASS_PROTOTYPE(Person);

// Define the solitary prototype instance Person_class

PROTOTYPE(Person);

class Student : public Person {

CLASS(Student);

PARENTS("Person");

FROM("STUDENT");

dbString(studcardid);

MAP_BEGIN

mapString(studcardid,"#THIS.STUDCARDID",20);

MAP_END;

};

CLASS_PROTOTYPE(Student);

PROTOTYPE(Student); // Student_class

class Employee : public Person {

CLASS(Employee);

PARENTS("Person");

FROM("EMPLOYEE");

dbInt(salary);

MAP_BEGIN

mapInt(salary,"#THIS.SALARY);

MAP_END;

};

CLASS_PROTOTYPE(Employee);

PROTOTYPE(Employee); // Employee_class

Because the library needs to keep track of the dirty status of persistent objects,

the programmer has to maintain this flag either by himself or better he should restrict

manipulation with persistent attributes to the use of getter and setter methods

defined by the macros. For every class T described

by these macros the library creates an associated template class prototype Proto<T>. The solitary instance of this prototype

class holds information about the metamodel described by the macros and provides

the actual database mapping. Prototypes are registered within the

ClassRegister. Using ClassRegister, the

library and/or application can search for prototypes by their names, and access

methods needed for CRUD[12]

operations.

Persistent classes inherit their behaviour from one of four base classes defined

in the library - the Object,

ImmutableObject, DatabaseObject or

PersistentObject class. Depending on what the parent is, different

features of the persistence are supported:

-

Object- instances of descendants of this class can be obtained as database query results. These objects do not have any database identity and can represent results from complex queries containing aggregate functions. More instances can thus be the same. -

ImmutableObject- instances of this class have a database identity mapped to one or more column(s) in the associated table (or view) and represent concrete rows in database tables or views. They can be loaded repetitively, but theImmutableObjectclass descendants still do not propagate changes made to them back to the database. To use this class as a query result, the query has to return rows that match rows in corresponding database tables or views. -

The

DatabaseObjectclass is much the same as theImmutableObject, but changes are propagated back to the database. -

The

PersistentObjectclass offers the most advanced persistence options. ThePersistentObjectdefines and maintains a unique attribute OID that holds the identity of everyPersistentObject's instance within the database. Unlike previous classes, persistence of whole type hierarchies is expected and supported.

As mentioned before, the library offers vertical mapping for descendants of the

PersistentObject. Tables related to mapped inheritance

hierarchies are joined using the surrogate OID key. If using

DatabaseObject descendants, the object model can be created upon an existing

(and in case of ImmutableObject descendants even

upon the read-only) database tables with arbitrary keys. In this case, however,

no inheritance between classes is allowed.

Associations in the POLiTe library are not modelled primarily as references but

as instances of the Relation class. There are five

subclasses of this class - OneToOneRelation, OneToManyRelation, ManyToOneRelation,

ManyToManyRelation and ChainedRelation

according to cardinality of the association. Their names describe which kind of

relation between the underlying tables they manage. ChainedRelation

is built from other relations and it can be used to define relation for indirectly

associated objects.

Example 3.2, “Associations in the POLiTe library” demonstrates how a one-to-many

relation between the Employee and

Student classes can be created and used.

Example 3.2. Associations in the POLiTe library

OneToManyRelation<Employee, Student> Employee_Student_Supervisor( "EMPLOYEE_STUDENT_SUPERVISOR", dbConnection ); // Let's suggest that the Supervisor variable represents // a reference to a "Ola Nordmann" Employee persistent object // and Student represents a reference to a "Richard Doe" // persistent object. // Create a supervisor relation between "Ola Nordmann" // and "Richard Doe". Employee_Student_Supervisor.InsertCouple( *Supervisor, *Student );

The relation can be queried for objects on both of its sides. So we may run queries like "Which students are supervised by Ola Nordmann?", "Who is the supervisor of Richard Doe?" or even more complex ones, but that would be out of the scope of this thesis.

The one-to-many relation can be replaced by a reference to s supervisor in the Student class definition:

... dbPtr(supervisor); dbString(studcardid); MAP_BEGIN mapPtr(supervisor,"SUPERVISOR"); ...

Usage of the references is closer to the object-oriented approach in which we navigate using pointers or references to gain access to the related objects. The drawback is, that the navigation is usually one-way and in this case, the retrieval of all supervised students of an employee is not trivial.

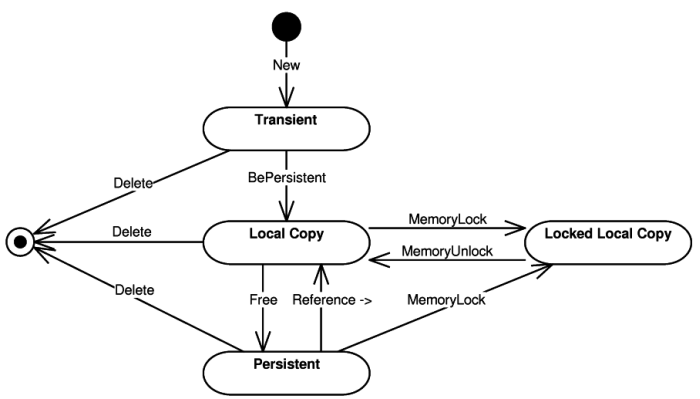

Persistent objects can enter one of the following states (see the state diagram in Figure 3.2, “POLiTe persistent object states”):

-

Transient - Each new instance of persistent class enters this state. The instance data are stored only in the application memory and are not persisted.

-

Local copy - A persistent image of the transient instance can be created by calling the

BePersistent()method. The method inserts attribute values of the instance to the database. The memory instance can be deallocated at any time as it is considered as a cached copy of the inserted database data. This state can be entered also at a later time when loading a persistent instance which has no local copy in the application memory. -

Locked local copy - To prevent the local copy deallocation, the local copy can be locked in the application memory. Local copy is not deallocated until its lock is released. After unlocking, the locked local copy enters the local copy state.

-

Persistent instance - A persistent object can enter this state if its local copy is removed from the application memory. During the state transition, the changes in the local copy are usually propagated to the database. The object exists now only in the database; it can be loaded later and enter one of the local copy states.

All local copies and local locked copies are managed by the

ObjectBuffer which acts as a trivial object cache. The buffer is implemented

as an associative container between object identities and local object copies. If

the buffer is full, all non-locked local copies are freed and dirty instances updated

in the database.

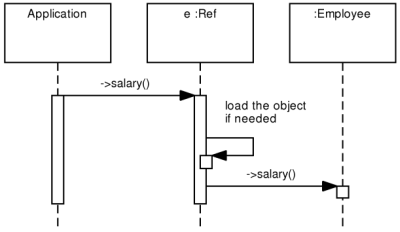

Because a persistent object can exist in one of those states, library uses indirect

references to access the object's attributes. Users do not have to know whether

the object is loaded into the object cache or if it exists only in the database.

Users can just access it via the Ref<T> reference

type using the overloaded -> operator. The library

looks for the requested instance in the object cache and if not found, it loads

it from the database. C++ chains the operator ->

calls until it gets to a type that does not overload the ->

operator and there it accesses the requested attribute or calls the requested function.

So if the variable e is of the

Ref<Employee> type, the following expression:

e->salary(65000);

does not invoke the setter method on the Ref<Employee>

instance, but it looks for the object in the object cache, loads it eventually,

and invokes the setter method on it. The process is illustrated by the Figure

3.3, “POLiTe references”.

POLiTe allows the users to specify several concurrent data access strategies the

ObjectBuffer will use. These strategies are used

to influence the safety or speed of concurrent access and cached data coherence.

-

Updating strategy - determines whether changes done to local copies are propagated to the database immediately or they can be deferred.

-

Locking strategy - determines how the rows in the database are locked when they are loaded into local copies. Shared, exclusive or no locking can be requested.

-

Waiting strategy - if the application tries to access a locked database resource (by another session), this strategy specifies whether the application waits until the resource gets unlocked or an exception is thrown.

-

Reading strategy - determines behaviour of the persistence layer if a local copy is accessed using the indirect reference

Ref<T>. Local copy can be either used right away or it can be refreshed with the data stored in the database. The refresh option can be speeded up by comparing timestamps of the local copy and of the stored image.

Object manipulation is illustrated by the Example 3.3, “Persistent object

manipulation”. Two objects - an employee and a student which is supervised

by that employee are created as transient instances and inserted into the database.

The BePersistent() call returns references to unlocked

local copies of created objects. Then the salary of the employee is modified and

the change propagated to the database. In the end, the student object is deleted

from the database and also from the memory.

Example 3.3. Persistent object manipulation

// Create a new employee

Employee* e = new Employee();

e->name("Ola Nordmann");

e->age(45);

e->salary(60000);

Ref<Employee> employee = e->BePersistent(dbConnection);

// Create a new student supervised by the employee created

Student* s = new Student();

s->name("Richard Doe");

s->age(22);

s->studcardid("WCD-3223");

s->supervisor(empl);

Ref<Student> student = s->BePersistent(dbConnection);

// Update the employee's salary

e->salary(65000);

e->Update(); // Propagates the change to the database

// The change could be propagated immediately if the

// updating strategy was set to the "immediate" setting.

// Delete the new student from the database

s->Delete();

);

Queries in the POLiTe library search for objects of a specified class. Search criteria

restricting the result set can be specified. Queries are represented as instances

of the Query class which contains only two data fields:

The search criteria (in fact the WHERE clause of the final SELECT statement together

with the ORDER BY clause specification) determines what object will be returned

and how the result will be ordered. The search criteria can be written using SQL

(referencing physical table and column names) or using a C++-like syntax. The C++-like

syntax hides the O/R mapping complexity and allows the users to use more convenient

class and attribute names. The query objects can be then combined using the C++

!, && and || logical operators. Results of the query execution are accessed

using instances of the Result<T> template class.

The template is used similarly to the Ref<T>

template.

Example 3.4, “Queries in the POLiTe library” illustrates how the queries

are created, combined and executed.

Example 3.4. Queries in the POLiTe library

// All employees with salary > 40000

Query q1("Employee::salary > 40000")

// All employees with the first name Ola

Query q2("Person::name LIKE 'Ola %');

// All employees with salary > 40000 having the first name Ola

Query q3 = q1 && q2;

// Order the result by the salary descending.

q3.OrderBy("Employee::salary DESC");

// Execute q3 and iterate through the result

Result<Employee>* result = Employee_class(q3, dbConnection);

while (++(*result)!=DBNULL) {

// members of the current object are accessible

// using (*result)->

};

result->Close();

delete result;

The library provides solid and rich-featured ORM solution. However, there are several areas in which the library can be improved:

-

Transparency - persistent classes have to be precisely described by macros. Typos in this description may lead to unclear compile time or runtime errors. Attributes must be accessed via the getter and setter methods.

-

Library design - library is one monolithic block and compiles into one shared library. There is no other way to add additional database drivers or features than changing the makefile and recompiling the library. Same applies to the library configuration - many parameters are configured as preprocessor macros. Changing them implies library recompilation.

-

Database dependency - without modifications, the library supports only the Oracle platform. Adding new database support supposes to derive new descendants of

Database,ConnectionandCursorclasses, implement their code and recompile the library. The library also contains several SQL fragments that are not separated into the database driver layer.

These disadvantages are addressed mostly by the IOPC library [04] and its descendant described further in this thesis. But first, we will look at the performance enhancement provided by the succeeding library POLiTe 2.

A new version of the POLiTe library focuses on the library performance and on the

design of new rich-featured cache layer. The cache layer replaces the

ObjectBuffer interface and enhances the concept of indirect memory pointers

by adding one more indirection level.

Architecture of the library remained almost unchanged. It is still compiled into one module and used the same way as the original POLiTe library was. Overview of the architecture can be seen in the Figure 3.4, “Architecture of the POLiTe 2 library”

Database layer uses OCI 7 to connect to the Oracle 7 database. The layer has been slightly modified to be able to notify the cache layer of several events like Commit or Rollback.

On the contrary, both the object cache ObjectBuffer

and the object references have been completely replaced by the new cache layer and

related infrastructure - database pointers. The differences are explained in the

following sections.

Because the new cache layer may use additional maintenance threads, most parts of the library have been made thread-safe. Various synchronisation primitives have been added to the common services. The main architectural change is that all basic database CRUD operations are routed through the cache layer which decides when or how to execute them.

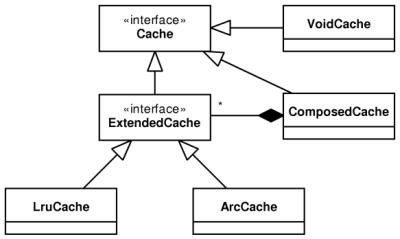

The biggest part of the cache layer represent the provided cache implementations. Cache implementations may derive from one of two interfaces depending on what features they will provide (see the Figure 3.5, “Caches in the POLiTe 2 library”):

-

Cache- an interface providing basic synchronous caching functionality. -

ExtendedCache- extends theCacheinterface with additional methods that are needed for asynchronous maintenance of the cache using the cache managerCacheKeeper(see later).

Concurrent data access strategies as introduced in the original POLiTe library can

be used with caches that implement the ExtendedCache

interface.

Caches can be grouped together using the ComposedCache

class with the help of selectors. Selectors are classes

whose instances determine which strategy or which cache should be used based on

the given object type, the object itself or based on other custom criteria. The

ComposedCache uses CacheSelector

to decide which cache should be used for the object being processed and then it

passes a StrategySelector to the selected cache as

a parameter to each of its operations. Then the cache uses the

StrategySelector to modify its behaviour with selected strategies.

Caches that implement the extended interface can be maintained by the cache manager

called CacheKeeper[13]. CacheKeeper runs

a second thread that scans managed caches and removes instances considered as 'worst'.

If dirty, these instances are written back to the database.

CacheKeeper acts also as a facade for composing caches and specifying

strategies.

Following cache implementations are provided with the POLiTe 2 library:

-

VoidCache- implements the basicCacheinterface. This implementation actually does not cache anything, it just holds locked local copies. As the copies are unlocked, changes are propagated immediately to the database and objects are removed from the cache. -

The

LRUCache(multithreaded) and theLRUCacheST(single threaded) implement theExtendedCacheinterface. These classes use the LRU[14] replacement strategy. The multithreaded variant can even be used with theCacheKeepers' asynchronous maintenance feature. LRU strategy discards least recently accessed items first. It maintains the items in ordered list. New or accessed items are added or moved to the top of the list. Least recently accessed items are pushed towards the bottom from which they are removed. For more information see [09]. -

The

ARCCache(multithreaded) and theARCCacheST(single threaded) use the ARC[15] replacement strategy. ARC tries to improve LRU by splitting the cached items into two LRU lists - one for items that were accessed only once (recent cache entries) and second for items that were accessed more than once (frequent cache entries). The cache adapts the size ratio between these two lists. ARC performs in most cases better than LRU, espetially in cases where a larger set of items is accessed (for example a query result is iterated). The cache is not polluted as frequently used items remain in the second list. For more information see [10][11].

Transient object instances are in the original POLiTe library created using standard

C++ dynamic allocation - using the new operator. After

making these objects persistent, pointers to these objects are exchanged for indirect

references represented by the Ref<T> template.

As the ownership of these objects is transferred to the object cache, the direct

pointers may be rendered invalid. In the POLiTe 2 library, the creation and destruction

of the objects is managed by the library code and users can access its members by

dereferencing indirect pointers.

The indirect pointer template Ref<T> was replaced

by the template DbPtr<T>. Its instance may

be referred to as a database pointer further in the text.

DbPtr<T> is used similarly to the

Ref<T> template. Example

3.5, “Persistent object manipulation in the POLiTe 2 library” demonstrates

the creation of a transient instance and its manipulation analogously to the

Example 3.3, “Persistent object manipulation”.

Example 3.5. Persistent object manipulation in the POLiTe 2 library

// Create a new employee

DbPtr<Employee> e;

e->name("Ola Nordmann");

e->age(45);

e->salary(60000);

e->BePersistent(dbConnection);

// Create a new student supervised by the employee created

DbPtr<Student> s;

s->name("Richard Doe");

s->age(22);

s->studcardid("WCD-3223");

s->supervisor(empl);

s->BePersistent(dbConnection);

// Update the employee's salary

e->salary(65000);

// There is no Update method as the cache updates the persistent

// image automatically according to the Updating strategy in use.

// Delete the new student from the database

s->DbDelete();

);

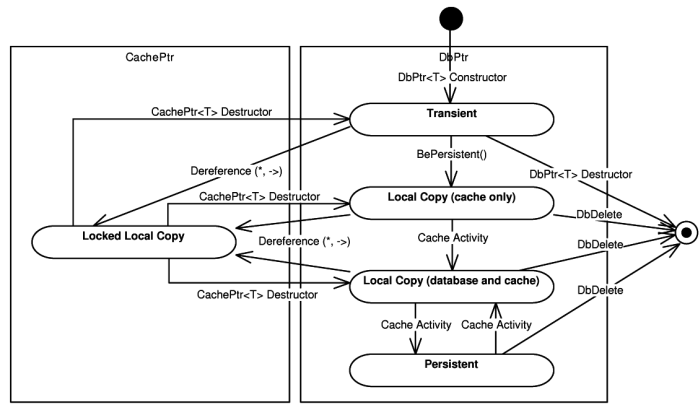

The DbPtr<T> can point to instances in several

states:

-

Transient instances which are present only in memory (and do not have a persistent representation yet), the owner of these instances is the pointer.

-

In-memory instances owned by a cache (which may or may not have a database representation. This represents in fact two states of the object).

-

Persistent representation of the object. The pointer contains only the identity of the object.

Last object state, the locked local copy, is implemented as an additional level

of indirection when dereferencing the database pointer. By dereferencing it using

the * operator, user receives an instance of a

cache pointer (CachePtr<T>). The existence

of the cache pointer guarantees that the object is loaded into the memory and that

the memory address of the object will not change until the instance of the cache

pointer is destroyed (unlocked). Such process locks the objects in the cache so

they cannot be removed unless they are unlocked. The transitions between the object

states are displayed in the

Figure 3.6, “States of the POLiTe 2 objects”.

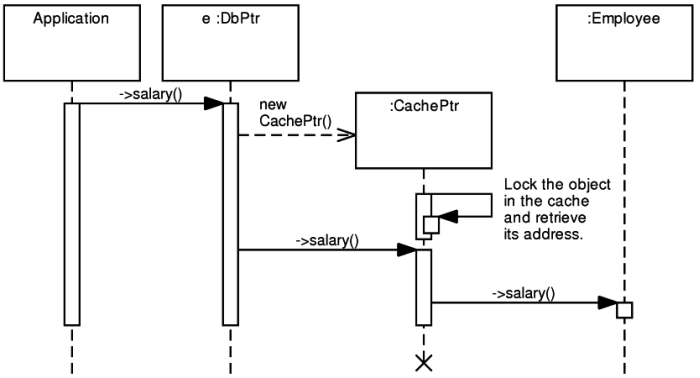

By using the * operator on a database pointer user

receives a reference to a loaded and locked instance in a cache. Constructor of

the created cache pointer asks cache to load relevant object from the database (if

not already present in the cache) and locks the object in the cache. The cache pointer

can be dereferenced again resulting in retrieval of direct pointer to the in-memory

instance. This process can be simplified just by using the ->

operator on the database pointer. An implicit instance of the cache pointer is created

and the -> operator invoked on it. Then the requested

member of the instance is accessed. After that, the cache pointer instance is destroyed

and cache lock released. (This may result in significant performance degradation

if using the VoidCache, because only locked copies are contained in the cache. Calling

the -> operator causes a persistent object to be

loaded from database into the memory, then desired member is accessed and object

- if modified - is written back and removed from cache). The process is almost the

same as if dereferencing the Ref<T> references,

the difference is the additional level - the cache pointer.

If the variable e is of the DbPtr<Employee>

type, the following expression:

e->salary(65000);

may trigger a sequence of actions as displayed in the Figure 3.7, “Dereferencing DbPtr in POLiTe 2”

The query language and query classes as described in the section called “Querying” have not been changed in the POLiTe 2 library. Just the query is now executed and its results fetched in a slightly different way:

Example 3.6. Executing queries in the POLiTe 2 library

// All employees with salary > 40000

Query q("Employee::salary > 40000")

// Execute q and iterate through the result

Result<Employee> result(dbConnection, q);

while (++result) {

// members of the current object are accessible

// using result->

};

result->Close();

New version of the POLiTe library allows users to utilise advanced persistent object caching features. Unmentioned remained the support for multithreaded environment which includes encapsulation of several synchronisation primitives.

Disadvantages listed in the the section called “Conclusion” also apply to this version of the library as they were not the point addressed by the thesis [03].

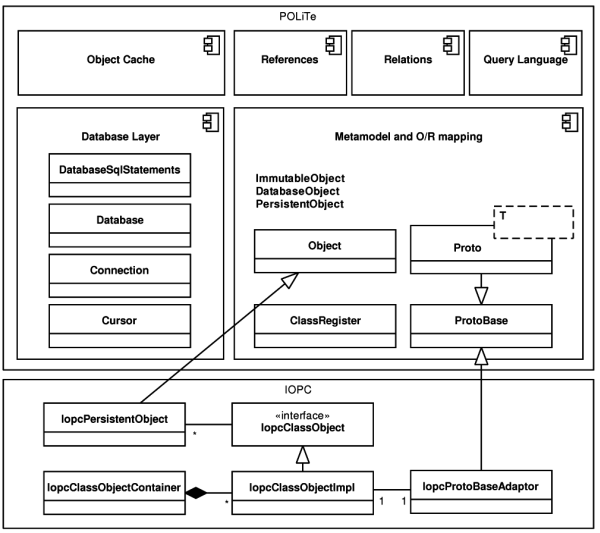

The IOPC library [04] contains a new API for O/R mapping. This new interface coexists with the old POLiTe-style interface inside one library. The interface is (with few exceptions) clearly divided into two parts - the IOPC and the POLiTe part. The POLiTe part provides backward compatibility with the original POLiTe library.

The IOPC library offers all features of the original POLiTe library. We will look only at the new IOPC interface. New features and main differences are listed below:

-

No need to describe the structure of persistent classes. Persistence works almost transparently.

-

All three basic types of class hierarchy mapping are supported - horizontal, vertical and filtered. Combinations of these types in one class hierarchy are also allowed (with a few exceptions).

-

Database views that join data from all mapping tabes are generated. These views are used for object loading and they also represent a simple read-only interface for non-library users.

-

Ability to define new persistent data types.

-

Loading persistent object attributes by groups. Persistent attributes can be divided into several groups which can be loaded separately.

-

Easy implementation of new RDBMS into the library[16].

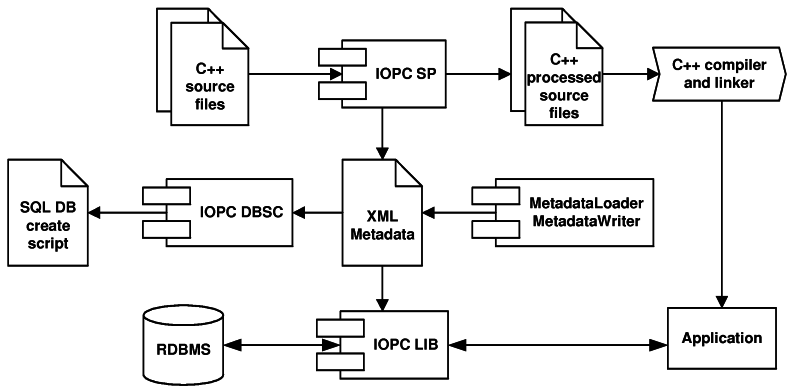

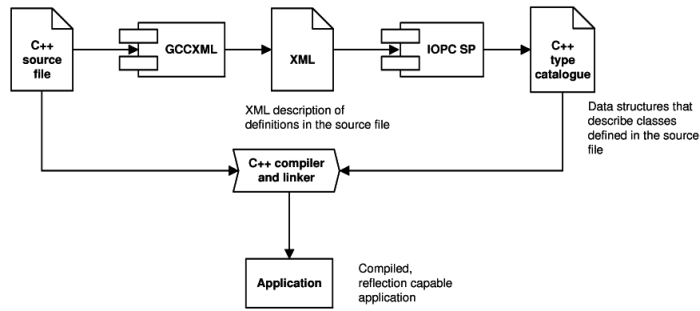

The IOPC library consist of a number of modules, some of them are standalone applications. The hi-level architecture overview can be best explained on the library workflow displayed in Figure 3.8, “The IOPC library workflow”.

Three main modules can be observed from the figure:

-

IOPC SP uses OpenC++[17] parser and source-to-source translator to modify the source code to add support of the object persistence and generates XML metamodel description of structure of persistent structure.

-

IOPC DBSC generates SQL scripts from the XML metamodel description. The SQL scripts create required database structured needed for the IOPC object-relational mapping.

-

IOPC LIB is a library that provides the object persistence services to a program to which it is linked. It uses the metamodel generated by the IOPC SP module and database structures created using the IPOC DBSC scripts.

-

XML metamodel description loading and storing is performed by two statically-linked libraries / classes -

XMLMetadataLoaderandXMLMetadataWriter. Both classes use the Xerces[18] parser to handle the XML files. Other type of metamodel storage can be implemented by inheriting from theMetadataLoaderandMetadataWriterinterfaces.

One of the most interesting aspects of the IOPC library is a new approach to metamodel description retrieval. User-created specification of the class structure is not needed. The source classes are parsed and metamodel description is collected by the IOPC SP module.

IOPC SP is a standalone executable created from patched OpenC++ source code and

a OpenC++ metaclass IopcTranslator. The metaclass

affects the source-to-source translation of persistent classes done by the OpenC++

by performing the following operations:

-

Generates the set and get methods for all persistent attributes. Setters modify the dirty status of the objects and getters ensure that corresponding attribute groups are loaded.

-

Modifies every reference to persistent attributes so that they use the generated get and set methods.

-

Generates additional members to the processed classes needed by the IOPC LIB.

-

Inspects the processed classes and writes information about their structure to a XML file by calling the

MetadataWriter(its implementationXMLMetadataWriter).

In the end, IOPC SP runs compiler and linker on the translated source code.

As you can see in the

Example 3.7, “Definition of persistent classes in IOPC”, persistent classes

in IOPC does not need any additional descriptive macros for the persistence layer

to be able to understand their structure. The only rules are that they need to be

descendants of the IopcPersistentObject and that

the attribute types need to be supported by IOPC. For example, IOPC does not understand

the std::string STL type, only the basic C/C++ string

representation char* (or its wide character variant)

is supported. If the string is allocated dynamically, it needs to be deallocated

in the desctructor.

Example 3.7. Definition of persistent classes in IOPC

class Person : public IopcPersistentObject {

public:

char* name;

short int age;

Person() {

name = NULL;

}

virtual ~Person() {

if (name != NULL) free(name);

}

};

class Employee : public Person {

public:

int salary;

};

class Student : public Person {

public:

char* studcardid;

Ref<Employee> supervisor;

Student() {

studcardid = NULL;

}

virtual ~Student() {

if (studcardid != NULL) free(studcardid);

}

};

Processed source code generated by the IOPC SP is deleted immediately after compilation.

It is not meant to be modified by developers. Following example displays the processed

Student class. It was stripped by the age attribute

because the listing was too long:

class Person : public IopcPersistentObject {

public:

Person() {

set_name ( __null ) ;

}

virtual ~Person() {

Free();

if ( m_name != __null ) free ( m_name ) ;

}

protected:

char * m_name;

bool m_name_isValid;

public:

virtual char * get_name()

{

if (!m_isPersistent || m_name_isValid || !m_classObject->isAttributePersistent(1)) return m_name;

m_classObject->loadAttribute(1, this);

if (!m_name_isValid)

throw IopcExceptionUnexpected();

return m_name;

}

public:

virtual char * set_name(char * _name) {

m_name =_name;

m_name_isValid = true;

if (m_isPersistent) MarkAsDirty();

return _name;

}

protected:

void iopcInitObject(bool loadingFromDB) {

if (loadingFromDB) {

m_name_isValid = false;

}

else {

m_classObject = IopcClassObject::getClassObject(ClassName(), true);

m_name_isValid = true;

}

}

virtual int iopcExportAttributes(IopcImportExportStruct * data, int dataLen) {

if (dataLen != 1) return 1;

data[0].valid = m_age_isValid;

if (m_age_isValid) data[0].shortVal = m_age;

return 0;

}

virtual int iopcImportAttributes(IopcImportExportStruct * data, int dataLen) {

if (dataLen != 1) return 1;

if (data[0].valid) {

if ((m_name_isValid) && (m_name)) free(m_name);

m_name = strdup(data[1].stringVal);

m_name_isValid = true;

}

return 0;

}

public:

static const char * ClassName() {return "Person";}

public:

static IopcPersistentObject * iopcCreateInstance() {

IopcPersistentObject * object = new Person;

object->iopcInitObject(true);

return object;

}

static RefBase * iopcCreateReference() {

return new Ref<Person>;

}

};

static IopcClassRegistrar<Person> Person_IopcClassRegistrar("Person");

Rather larger amount of code was inserted into the class definition. IOPC SP generated

setter and getter methods for the attributes, serialisation and deserialisation

routines (iopcExportAttributes and

iopcImportAttributes) and other methods needed by the persistence layer.

Not only the class code was translated. Code that reads or sets attribute values was changed to use the getter and setter methods (like we would use in the POLiTe library):

// original: Employee e; e.age = 45; // translated assignment operation: e.set_age(45);

IOPC SP also generates a XML metamodel file describing structure of the classes, see Example 3.8, “XML metamodel description file”.

Example 3.8. XML metamodel description file

<iopc_mapping project_name="example">

<class name="Employee">

<base_class>Person</base_class>

<mapping db_table="EMPLOYEE" type="inherited">

<group name="default_fetch_group" persistent="true">

<attribute db_column="SALARY" db_type="NUMBER(10)" name="salary" type="int"/>

</group>

</mapping>

</class>

<class name="Person">

<mapping db_table="PERSON" type="vertical">

<group name="default_fetch_group" persistent="true">

<attribute db_column="AGE" db_type="NUMBER(10)" name="age" type="short"/>

</group>

<group name="1st_persistent_group" persistent="true">

<attribute db_column="NAME" db_type="VARCHAR2(4000)" name="name" type="char *"/>

</group>

</mapping>

</class>

<class name="Student">

<base_class>Person</base_class>

<mapping db_table="STUDENT" type="inherited">

<group name="default_fetch_group" persistent="true">

<attribute db_column="SUPERVISOR" db_type="NUMBER(10)" name="supervisor" type="Ref<Employee>"/>

</group>

<group name="1st_persistent_group" persistent="true">

<attribute db_column="STUDCARDID" db_type="VARCHAR2(4000)" name="studcardid" type="char *"/>

</group>

</mapping>

</class>

</iopc_mapping>;

As mentioned before, persistent attributes can be divided into several groups which

are handled by IOPC separately. By default, two groups are generated - a

default_fetch_group containing all numeric attributes and a

1st_persistent_group containing attributes of all remaining data

types (strings). The XML metamodel file can be customized by developers before running

IOPC DBSC.

IOPC DBSC is a standalone executable that generates SQL scripts for various purposes

- in particular the scripts to create or delete database structures required by

the object-relational mapping. It uses MetadataLoader

to load the persistent class metamodel written by the IOPC SP. SQL script created

from the previously generated XML metamodel file follows.

First it creates database table for the class list and fills it:

CREATE TABLE EXAMPLE_CIDS

(

CLASS_NAME VARCHAR2(64)

CONSTRAINT EXAMPLE_CIDS_PK PRIMARY KEY,

CID NUMBER(10) NOT NULL

CONSTRAINT EXAMPLE_CIDS_UN UNIQUE

);

INSERT INTO EXAMPLE_CIDS VALUES ('Employee', 1);

INSERT INTO EXAMPLE_CIDS VALUES ('Person', 2);

INSERT INTO EXAMPLE_CIDS VALUES ('Student', 3);

Followed by a table (main table) containing OIDs of all persistent objects in the project:

CREATE TABLE EXAMPLE_MT

(

OID NUMBER(10)

CONSTRAINT EXAMPLE_MT_PK PRIMARY KEY,

CID NUMBER(10)

CONSTRAINT EXAMPLE_MT_FK REFERENCES EXAMPLE_CIDS(CID)

);

CREATE INDEX EXAMPLE_MT_CID_INDEX ON EXAMPLE_MT(CID);

Then the mapping tables associated with persistent classes are created (we used the default vertical mapping):

CREATE TABLE PERSON

(

OID NUMBER(10)

CONSTRAINT PERSON_PK PRIMARY KEY

CONSTRAINT PERSON_CD REFERENCES EXAMPLE_MT(OID) ON DELETE CASCADE,

CID NUMBER(10)

CONSTRAINT PERSON_FK2 REFERENCES EXAMPLE_CIDS(CID),

AGE NUMBER(10),

NAME VARCHAR2(4000)

);

CREATE INDEX PERSON_CID_INDEX ON PERSON(CID);

CREATE TABLE EMPLOYEE

(

OID NUMBER(10)

CONSTRAINT EMPLOYEE_PK PRIMARY KEY

CONSTRAINT EMPLOYEE_CD REFERENCES EXAMPLE_MT(OID) ON DELETE CASCADE,

SALARY NUMBER(10)

);

CREATE TABLE STUDENT

(

OID NUMBER(10)

CONSTRAINT STUDENT_PK PRIMARY KEY

CONSTRAINT STUDENT_CD REFERENCES EXAMPLE_MT(OID) ON DELETE CASCADE,

STUDCARDID VARCHAR2(4000),

SUPERVISOR NUMBER(10)

);

Finally, two views are created for each persistent class. Simple

views (SV suffix) join all tables needed for

loading complete instances of particular persistent classes. Their columns correspond

to attributes of the associated classes. Polymorphic views

(PV suffix) have same structure as simple views: the

difference is that they return not only instances of the associated classes, but

also instances of their descendants.

CREATE VIEW Person_sv AS SELECT OID, AGE, NAME FROM PERSON WHERE CID = 2; CREATE VIEW Person_pv (CID, OID, AGE, NAME) AS SELECT CID, OID, AGE, NAME FROM PERSON WHERE PERSON.CID IN (2, 1, 3); CREATE VIEW Employee_sv (OID, AGE, NAME, SALARY) AS SELECT EMPLOYEE.OID, AGE, NAME, SALARY FROM PERSON, EMPLOYEE WHERE EMPLOYEE.OID = PERSON.OID; CREATE VIEW Employee_pv (CID, OID, AGE, NAME, SALARY) AS SELECT 1, EMPLOYEE.OID, PERSON.AGE, PERSON.NAME, SALARY FROM PERSON, EMPLOYEE WHERE EMPLOYEE.OID = PERSON.OID; CREATE VIEW Student_sv (OID, AGE, NAME, STUDCARDID, SUPERVISOR) AS SELECT STUDENT.OID, AGE, NAME, STUDCARDID, SUPERVISOR FROM PERSON, STUDENT WHERE STUDENT.OID = PERSON.OID; CREATE VIEW Student_pv (CID, OID, AGE, NAME, STUDCARDID, SUPERVISOR) AS SELECT 3, STUDENT.OID, PERSON.AGE, PERSON.NAME, STUDCARDID, SUPERVISOR FROM PERSON, STUDENT WHERE STUDENT.OID = PERSON.OID;

IOPC LIB is a shared library that represents the core of the IOPC project. It is linked to the outputs (object files) of IOPC SP and provides the run-time functionality.

IOPC LIB is built on the POLiTe library, it uses some of its components and exposes

its new interface side-by-side with the original POLiTe interface. The result is

that the IOPC library can be used almost the same way as its predecessor. It supports

the POLiTe-style persistent objects as well as new persistent objects inherited

from the IopcPersistentObject class and processed

with IOPC SP. Components from the POLiTe library that IOPC LIB uses are displayed

in

Figure 3.9, “POLiTe library components used in IOPC LIB”.

Because the object cache and the object references are reused from the POLiTe library, persistent object enter same states using same state transitions as in the section called “Persistent object manipulation”. There is, however, one problem with the current implementation in that it does not implement the locking correctly and all persistent objects look like unlocked all the time. So the Locked Local Copy state can be entered only by instances of the POLiTe persistent classes.

The query language and all related classes or templates are also reused, so there is no change in this area either.

IOPC persistent objets can be associated exclusively using references as described

at the end of the

the section called “Metamodel and object-relational mapping.”. Relations

described earlier in that section can be used only for the POLiTe persistent objects.

IOPC adds a new RefList<T> template to the

standard POLiTe Ref<T> reference representing

a persistable list of references. The list is stored into a separate table (one

per project) which unfortunately does not have standard many-to-many join table

schema. Each instance of the RefList<T> is

stored as a linked list of OIDs of its members. The table is completely unusable

in SQL queries unless we are using its recursive features (if available) or procedural